Notes on Agent Evals

Researchers are still figuring out how to evaluate language models to find out what they can and can't do, and to track their progress over time. As we build more generalist systems, however, we're going to need even more sophisticated evaluation techniques.

Thanks to Ollie Jaffe, James Stirrat-Ellis and David Mlcoch for feedback on this post.

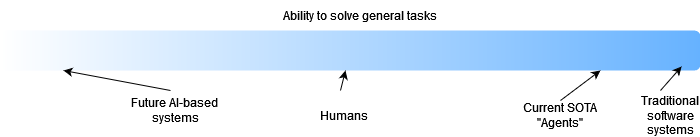

Consider this spectrum:

You can't apply the same techniques used to test deterministic software systems to humans. CI pipeline tests and unit tests aren't the right framework for evaluating our performance.

Usually our testing of software systems roughly corresponds to putting in $value and checking that we get $output. But running this testing paradigm on humans is difficult and results in things like LeetCode style interview questions, which are contrived and map poorly to the actual work of an engineer.
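For reference, the "put in a value, check the output" paradigm looks like this in practice. This is a minimal sketch; `slugify` is a hypothetical function under test, not something from a real codebase.

```python
import re


def slugify(title: str) -> str:
    """Hypothetical function under test: turn a title into a URL slug."""
    return re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")


def test_slugify() -> None:
    # Put in a value, check that we get exactly the expected output.
    assert slugify("Notes on Agent Evals") == "notes-on-agent-evals"
    assert slugify("  Hello, World!  ") == "hello-world"


test_slugify()
```

This works because `slugify` has exactly one right answer per input, which is the property that humans and generalist agents lack.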

If someone is doing a highly repetitive job like widget maneuvering, you might be able to use metrics like "how many widgets moved from location A to B", but a job like software engineering is too complex to evaluate in this way. Testing whether a developer who is confronted with some situation X outputs exactly Y is a fool's errand, and counting lines of code contributed results in instant catastrophic Goodharting. There are many ways to complete an engineering task with different tradeoffs, and the "correct" answer is often subtle, impossible to judge, or context-dependent.

As generalist agents become useful for solving real tasks, we will need new ways to evaluate them. We'll define a generalist agent as something like an LLM with tool usage (minimal internet access, code execution) running inside a for-loop on a computer. Consider the following tasks we could give this agent:

- write a web scraper to identify the best investment opportunities
- fill $role at $company by finding the most suitable candidates online and hiring them
- identify vulnerabilities in a company's network and write a report on them
- find the most suitable location for my new $structure given $spec and write a 30 page executive report on it

Today's LLM evaluations are rising to the challenge. Early eval suites like Winograd and HellaSwag did a good job of testing the capabilities of LLMs on multiple choice or verifiable questions, but they were limited in scope. New eval suites like SWE-Bench provide verification mechanisms that don't rely on a single right answer, in this case by leveraging existing unit tests to see if the code written to fix a given GitHub issue is good or not.
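The idea of verifying against existing tests instead of a single reference answer can be sketched in a few lines. This is not the actual SWE-Bench harness; the test cases and the three candidate "fixes" below are made up for illustration.

```python
from typing import Callable, List

Candidate = Callable[[List[int]], List[int]]


def passes_existing_tests(dedupe: Candidate) -> bool:
    """Stand-in for a repository's existing unit tests (hypothetical cases)."""
    cases = [
        ([], []),
        ([1, 1, 2], [1, 2]),
        ([3, 1, 3, 2, 1], [3, 1, 2]),  # order of first appearance must be kept
    ]
    try:
        return all(dedupe(list(given)) == expected for given, expected in cases)
    except Exception:
        # A candidate that crashes counts as a failure.
        return False


def fix_with_dict(items: List[int]) -> List[int]:
    return list(dict.fromkeys(items))


def fix_with_loop(items: List[int]) -> List[int]:
    seen, out = set(), []
    for item in items:
        if item not in seen:
            seen.add(item)
            out.append(item)
    return out


def broken_fix(items: List[int]) -> List[int]:
    return sorted(set(items))  # removes duplicates but loses the original order


results = {
    fix.__name__: passes_existing_tests(fix)
    for fix in (fix_with_dict, fix_with_loop, broken_fix)
}
print(results)
```

Two differently written fixes both pass, and the one that breaks behaviour fails, without anyone having to write down the "correct" patch.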

But where is the trajectory of increasingly general evaluation techniques taking us?

One way of reasoning about this is to think about how we eval humans* who do intellectually demanding work like running a company or making new scientific contributions. It seems like we use an unstructured combination of socially constructed metrics like stock price, citation count and even more nebulous metrics like social standing and perceived skill to evaluate them.

What are the equivalent metrics we would use for a generalist non-human agent? We can't keep writing deterministic eval suites. We're probably going to need vibes-based mechanisms that work for arbitrary domains.

One key reason for this is that test suites are very brittle. Consider an LLM pipeline that does research on potential clients and then engages in cold outbound email as well as customer development over time. You might have a suite of email chains that historically were considered good, but if you change the underlying prompt to make the agent more aggressive/friendly or change the way it uses internet resources, the old set of "good" email chains might not accurately reflect the agent's current outputs. The same problem applies if you try to change the domain you're operating in from B2B SaaS HR tech to B2G widget manufacturing. You'd need a new set of email chains that were considered good for that domain.

A better approach might be to try to divine the revenue impact of that agent. If the agent is able to generate leads and close deals, we can track the revenue generated by that agent and use that as a metric. This feels like a sparse reward problem and might be too noisy to be useful, though.

Another, perhaps better way of solving this would be to have a human in the loop who could give signals as to their perceived performance of that agent. By upvoting or downvoting certain outputs, and potentially even providing feedback on or modifying the email** that the agent wrote (preferably before it is sent so that we can intervene before it causes damage to relationships), we can start to collect signal as to the efficacy of our agent and guide the agent towards outputs that are more likely to be useful.
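A minimal sketch of what that review step could look like as a data structure, assuming a simple +1/-1 vote and an optional human edit. The `Review` and `resolve` names are hypothetical, not part of any existing tool.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Review:
    """One human judgement on one agent output (hypothetical schema)."""
    vote: int                          # +1 for an upvote, -1 for a downvote
    edited_text: Optional[str] = None  # the human's rewrite, if they made one
    comment: str = ""                  # free-form feedback


def resolve(draft: str, review: Review) -> Optional[str]:
    """Decide what, if anything, gets sent after the human has looked at it."""
    if review.edited_text is not None:
        return review.edited_text      # the human's version always wins
    return draft if review.vote > 0 else None  # a downvote blocks sending


draft = "Hi Sam, would a short call next week be useful?"
print(resolve(draft, Review(vote=1)))
print(resolve(draft, Review(vote=-1, comment="too pushy")))
print(resolve(draft, Review(vote=-1, edited_text="Hi Sam, no rush, but happy to chat.")))
```

Every `Review` is worth storing alongside the draft it refers to, since the vote, the edit and the comment are all signal about the agent.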

In doing this we also gather signal from human experts that can be used to train more effective systems. Ranking between LLM outputs if all of the outputs are bad is not useful, but having a domain-expert human analyse and rate/modify the agent's output would be very useful. Consider that one could allow the overseeing human to modify the proposed action or text from the agent, and that the human steers the agent towards a satisfactory end result (instead of it decohering or otherwise failing at its task). The resulting chain of reasoning and actions from input task to successful completion is a valuable source of training data that might not have otherwise existed if there was not an efficient way of giving feedback to an agent during its execution cycle.
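One way to capture such a human-steered run is sketched below. The `Step` and `Trajectory` classes and the JSON layout are assumptions made for illustration, not an established format.

```python
import json
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Step:
    proposed: str                    # what the agent wanted to do
    corrected: Optional[str] = None  # what the human changed it to, if anything

    @property
    def final(self) -> str:
        return self.corrected if self.corrected is not None else self.proposed


@dataclass
class Trajectory:
    task: str
    steps: List[Step] = field(default_factory=list)
    succeeded: bool = False


def to_training_example(traj: Trajectory) -> Optional[str]:
    """Keep only successful runs, using the human-corrected action at each step."""
    if not traj.succeeded:
        return None
    return json.dumps({"task": traj.task, "actions": [s.final for s in traj.steps]})


traj = Trajectory(task="book a demo with $company")
traj.steps.append(Step(proposed="search for the CEO's personal email"))
traj.steps[-1].corrected = "search for the sales contact form"
traj.steps.append(Step(proposed="send a two-line intro email"))
traj.succeeded = True
print(to_training_example(traj))
```

Keeping both `proposed` and `corrected` also preserves the pairs where the human disagreed with the agent, which is preference data in its own right.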

Collecting this signal gives us the ability to start Elo ranking agents on specific tasks too, in a similar manner to https://lmsys.org/, but agnostic to domain. You could A/B test your proposed agent on

This is a messy approach, requires human oversight and judgement, and is far from perfect, but it's applicable to ~arbitrary domains, gives us instant signal, and doesn't require us to come up with a comprehensive set of tests beforehand. It allows us to build and iterate on agentic pipelines in a loop, tweaking the prompt and tool usage until we get a result that is good enough to be useful, using human feedback as our guide.
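The Elo-style ranking mentioned above can be driven directly by pairwise human preferences. Here is a minimal sketch of the standard Elo update; the K-factor of 32 and the starting rating of 1000 are arbitrary choices, and the agent names are hypothetical.

```python
from typing import Dict, Tuple


def update_elo(rating_a: float, rating_b: float, score_a: float, k: float = 32.0) -> Tuple[float, float]:
    """Standard Elo update. score_a is 1.0 if A was preferred, 0.0 if B was, 0.5 for a tie."""
    expected_a = 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))
    expected_b = 1.0 - expected_a
    new_a = rating_a + k * (score_a - expected_a)
    new_b = rating_b + k * ((1.0 - score_a) - expected_b)
    return new_a, new_b


ratings: Dict[str, float] = {"agent_v1": 1000.0, "agent_v2": 1000.0}

# A human compared both agents' outputs on the same task and preferred agent_v2.
ratings["agent_v2"], ratings["agent_v1"] = update_elo(
    ratings["agent_v2"], ratings["agent_v1"], score_a=1.0
)
print(ratings)  # {'agent_v1': 984.0, 'agent_v2': 1016.0}
```

Nothing here depends on the domain. All it needs is a human saying which of two outputs they preferred.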

This should not just be a process used for offline training for LLMs. All businesses need evaluation suites to test if their code and agents are working as intended. When you update a critical prompt in your B2B Agent-as-a-Service pipeline, you need to know that you haven't just decimated the efficacy of your clients' agents downstream. Currently, you'd likely break all of their tests but you'd have no way of knowing whether it was for the better or not.

If those downstream clients (or their end users) could give instant feedback on their agent (potentially before it is actually deployed), you'd be able to identify, iterate on and fix problems introduced into agentic systems without having to wait for a new test suite to be written and rolled out. This seems like it would allow us to decouple our agent pipeline from a rigid test suite, and deploy it into arbitrary domains with a more robust understanding of how it is actually performing in the wild.

The obvious next step is to then take this human preference data and train models to predict what a human would rate an agent's action or output.
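As a toy illustration of that step, here is a tiny logistic regression trained on upvote/downvote labels. Everything in it is an assumption made for the sketch: the hand-written features, the four example emails and the hyperparameters. A real system would presumably learn from the text itself using a much larger model.

```python
import math
from typing import List, Tuple


def featurize(text: str) -> List[float]:
    """Toy features: a bias term, length in hundreds of characters, exclamation marks."""
    return [1.0, len(text) / 100.0, float(text.count("!"))]


def predict(weights: List[float], text: str) -> float:
    """Estimated probability that a human would upvote this text."""
    z = sum(w * x for w, x in zip(weights, featurize(text)))
    return 1.0 / (1.0 + math.exp(-z))


def train(data: List[Tuple[str, int]], epochs: int = 200, lr: float = 0.1) -> List[float]:
    """Plain stochastic gradient descent on the logistic loss."""
    weights = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        for text, label in data:
            error = predict(weights, text) - label
            for i, x in enumerate(featurize(text)):
                weights[i] -= lr * error * x
    return weights


# Made-up human feedback: 1 = upvoted, 0 = downvoted.
feedback = [
    ("Hi Sam, would a short call next week be useful?", 1),
    ("Thanks for your time today. Notes attached.", 1),
    ("BUY NOW!!! Limited offer!!!", 0),
    ("Act fast! You will regret missing this!", 0),
]

weights = train(feedback)
calm = "Hello, are you free for a quick chat on Tuesday?"
shouty = "Great deal!! Reply today!!"
print(predict(weights, calm) > predict(weights, shouty))
```

The point is the shape of the loop and not the model: human ratings go in, and out comes something that can score new agent outputs without a human present.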